NVIDIA Launches TensorRT-LLM to Enhance Large Language Model Performance on Its GPUs

NVIDIA has introduced TensorRT-LLM, a new software platform designed to improve the efficiency of large language models (LLMs) running on the company's GPUs. According to NVIDIA, the software will support all of its current GPUs, including the A100 and H100.

The platform's performance gains come from several new features. The most notable is an in-flight batching scheduler, which raises GPU utilization by processing multiple queries at once. When one request in a batch finishes, the scheduler removes it and starts a new request in its place, instead of waiting for every request in the batch to complete.
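To see why this helps, here is a toy simulation comparing static batching with in-flight batching. It is a minimal sketch under stated assumptions, not TensorRT-LLM's scheduler: it assumes every decoding step produces exactly one token per active request, that all requests are queued up front, and it ignores prompt processing and KV-cache memory. The names `Request`, `static_batching_steps`, and `inflight_batching_steps` are hypothetical and are not part of any NVIDIA API.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    rid: int
    tokens_to_generate: int  # output tokens this request needs (must be >= 1)

    def __post_init__(self):
        if self.tokens_to_generate < 1:
            raise ValueError("tokens_to_generate must be at least 1")


def static_batching_steps(requests, max_batch):
    """Fixed batches: each batch runs until its longest request finishes,
    so slots freed by short requests sit idle."""
    steps = 0
    for start in range(0, len(requests), max_batch):
        batch = requests[start:start + max_batch]
        steps += max(r.tokens_to_generate for r in batch)
    return steps


def inflight_batching_steps(requests, max_batch):
    """In-flight batching (toy model): finished requests leave after every
    step and queued requests immediately take over their slots."""
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    queue = deque(requests)
    active = {}  # request id -> tokens still to generate
    steps = 0
    while queue or active:
        # Fill any free slots from the queue before the next decoding step.
        while queue and len(active) < max_batch:
            req = queue.popleft()
            active[req.rid] = req.tokens_to_generate
        # One decoding step: every active request emits one token.
        steps += 1
        for rid in list(active):
            active[rid] -= 1
            if active[rid] == 0:
                del active[rid]
    return steps


if __name__ == "__main__":
    lengths = [100, 10, 10, 10, 100, 10, 10, 10]
    reqs = [Request(i, n) for i, n in enumerate(lengths)]
    print(static_batching_steps(reqs, max_batch=4))    # 200
    print(inflight_batching_steps(reqs, max_batch=4))  # 110
```

With four slots, each static batch is held open for 100 steps by its one long request while the other three slots idle, so the 360 output tokens take 200 steps. In-flight batching refills those idle slots and finishes the same work in 110 steps.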

TensorRT-LLM is also optimized for Hopper-architecture GPUs such as the H100, offering automatic conversion to FP8 precision, a deep learning compiler that performs kernel fusion, and a mixed-precision optimizer.
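FP8 conversion means representing values with 8-bit floating-point numbers instead of 16- or 32-bit ones. The sketch below is a generic illustration of the idea, not TensorRT-LLM's implementation. It assumes the E4M3 FP8 variant (3 mantissa bits, largest finite value 448), finite inputs, and a single per-tensor scale derived from the tensor's largest absolute value. The helpers `round_to_e4m3`, `quantize_fp8`, and `dequantize_fp8` are hypothetical names chosen for this example.

```python
import math

E4M3_MAX = 448.0          # largest finite E4M3 value: 1.75 * 2**8
E4M3_MIN_EXP = -6         # exponent of the smallest normal E4M3 value (2**-6)
E4M3_MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round a finite x to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= E4M3_MAX:
        return sign * E4M3_MAX
    _, e = math.frexp(a)                       # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)             # floor(log2(a)), clamped for subnormals
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)   # gap between neighbouring E4M3 values
    q = round(a / step) * step                 # Python's round() breaks ties to even
    return sign * min(q, E4M3_MAX)


def quantize_fp8(values):
    """Scale so the largest magnitude maps to 448, then round each value to E4M3."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in values], scale


def dequantize_fp8(fp8_values, scale):
    """Undo the per-tensor scaling to approximate the original values."""
    return [q * scale for q in fp8_values]


if __name__ == "__main__":
    weights = [0.02, -1.3, 0.75, 3.9]
    fp8_values, scale = quantize_fp8(weights)
    print(dequantize_fp8(fp8_values, scale))
```

Because E4M3 keeps only 3 mantissa bits, each scaled value in the normal range is rounded with a relative error of at most 1/16; the per-tensor scale keeps large values from overflowing the format's narrow range.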

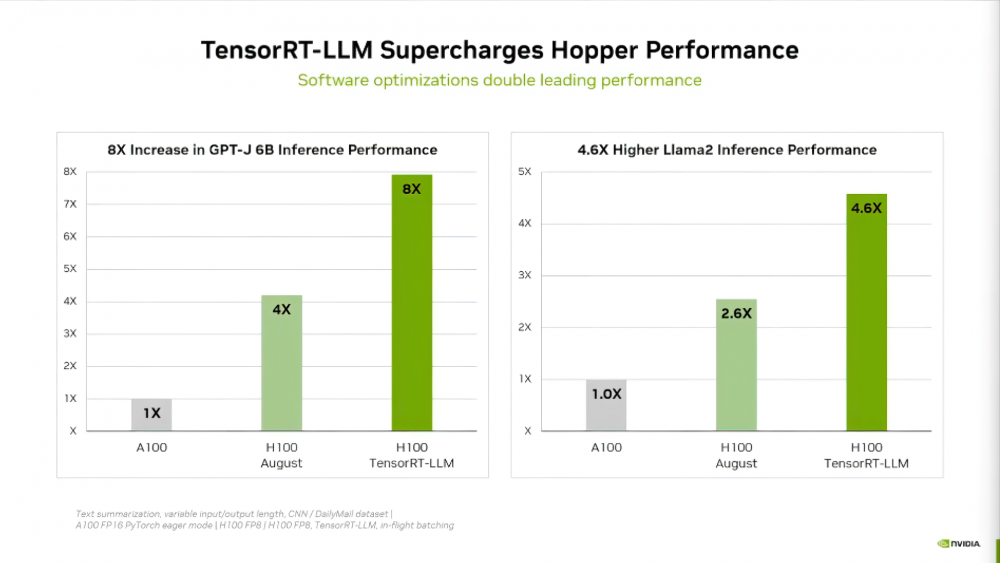

According to NVIDIA's data, TensorRT-LLM doubled the H100's performance on the GPT-J 6B benchmark and delivered up to a 5x speedup on the Llama 2 benchmark. The company is also working with industry leaders such as Meta and Grammarly to accelerate their language models with TensorRT-LLM.